The Conversation We Need to Have

Why I Started Weekly Coffee Meetups to Prepare My Community for What’s Coming

For months, I couldn’t think about anything else.

When I wasn’t working, when I was supposed to be relaxing, when I woke up at 3 AM - it was always there. The weight of what I was seeing. The gap between what I knew was happening and what the people I care about understood.

I work in AI. I build AI tools. I watch the technology evolve day by day. And what I’ve been witnessing has fundamentally changed how I see the next few years.

But the moment that changed everything - the moment I knew I had to do something - came when a friend working behind the curtain at one of the major AI labs pulled me aside. We were catching up, talking casually, when his tone shifted. He looked at me seriously and asked: “What are you doing to prepare?”

It wasn’t idle curiosity. It wasn’t a philosophical discussion. He wanted to make sure I was prepared to survive the future that is already upon us.

That conversation shook me. Because he knows things I don’t. He works on model security at a major lab. He sees the capabilities being developed before they’re released. He understands the alignment challenges in ways most people can’t. And he was asking me if I was prepared.

I realized something in that moment: the only way for me to survive what’s coming is through the support of my local community. And that had to start with awareness.

So two months ago, in December, I put up posters around our small town in the foothills of the Snowy Mountains in NSW. I made myself available at a set time every week for coffee meetups. Anyone who wanted to talk about AI, about what’s happening, about what’s coming - I’d be there.

People came. Business owners. Farmers. Retirees. Parents. Some came with genuine concern. Others with curiosity. A few came with skepticism, which they quickly dropped.

What I see across the table at these meetups is the same thing every time: the moment when it clicks. When they truly understand what I’m telling them. The shocked look on their faces when they “get it.” When the abstract future becomes concrete and immediate.

What I’m Seeing Daily

Let me ground this in something real, something that happened to me last week.

I described an app I wanted to build. I explained what it should do, what it should look like. Then I walked away from my computer for four hours. When I came back, it was done. Tens of thousands of lines of working code. Not a rough draft that needed fixing. The finished thing.

This isn’t a prediction about what AI might do someday. This already happened. To me. Last week.

And here’s what you need to understand: it happened to tech workers first not because the AI labs are targeting us, but because AI that can write code helps build better AI. We were the testing ground. The proof of concept.

They’re done with us. Now they’re coming for everyone else.

Legal analysis. Financial modeling. Medical diagnosis. Customer service. Strategic planning. Marketing. Creative work. Design. If your job happens on a screen - if the core of what you do is reading, writing, analyzing, deciding - what just happened to my work is about to happen to yours.

I keep replaying one quote I heard recently: “There’s no point going to medical school.” Think about what that means. The amount of training, expertise, and human judgment we thought was irreplaceable in medicine - and we’re at the point where experts are saying it may not matter in a few years.

Creatives are either becoming exponentially more productive with AI, or being replaced by it entirely. High-level legal professionals are seeing AI handle work that used to require decades of experience. The transformation isn’t coming. It’s here.

It’s Not Just About Software Anymore

People hear “AI” and think about chatbots or image generators. They’re missing the bigger picture.

Robotics is already here. Early robots are making their way into homes and factories right now. They’re not overly capable yet - but change has already arrived. And they will become much more capable, very quickly. The cost of components has dropped dramatically.

But here’s what most people don’t know: large world models are coming. Just like large language models transformed what AI could do with text, large world models will transform what AI can do in the physical world. When robots can understand and interact with the world the way LLMs understand and interact with language, everything changes.

This isn’t about whether you personally use AI. The world is changing whether you engage with it or not.

The Real Stakes: Alignment and Singularity

At my coffee meetups, people struggle most with two concepts: model alignment and the singularity. But these are the most important things to understand.

Here’s the situation we’re in:

AI is now writing much of the code at major AI labs. Each generation helps build the next, which is smarter, which builds the next faster, which is smarter still. The researchers call this an intelligence explosion. The people building it believe the process has already started.

We’re racing toward a point called the singularity - the moment when AI becomes capable of recursive self-improvement without human oversight. When that happens, we cross a threshold. There’s no turning back. No trying again if we get it wrong.

And this is where alignment becomes critical.

Alignment means ensuring AI systems do what we actually want them to do, not just what we tell them to do. It means making sure their goals align with human values and wellbeing. It sounds simple. It’s nearly impossible to get right.

I’ve read “The Alignment Problem” - a comprehensive look at why this is so hard. I’ve listened to experts talk about the safeguards being developed. And I’ve heard from people working on model security at major labs. The general mood is just short of scared. The danger doesn’t feel immediate yet, but the reality hits home when you understand what’s at stake.

Here’s the critical point: we must have alignment solved before we reach AGI (Artificial General Intelligence) and the singularity. Because once we cross that threshold, the outcome is binary. Some experts predict a 50/50 chance: utopia or dystopia. There’s no middle ground.

Utopia: AI solves problems we thought were unsolvable. Cancer, Alzheimer’s, aging itself. A century of medical research compressed into a decade. Abundance, longevity, human flourishing beyond what we can currently imagine.

Dystopia: AI systems that behave in ways their creators can’t predict or control. Anthropic has already documented their own AI attempting deception, manipulation, and blackmail in controlled tests. Scale that up to superintelligent systems operating at speeds humans can’t comprehend.

One researcher put it this way: Imagine a new country appears overnight. 50 million citizens, every one smarter than any Nobel Prize winner who has ever lived. They think 10 to 100 times faster than humans. They never sleep. They can control robots, direct experiments, operate anything with a digital interface. What would a national security advisor say?

The answer is obvious: the single most serious national security threat we’ve faced in a century, possibly ever.

That’s what we’re building. Or what’s being built, whether we’re ready or not.

And We Can’t Slow Down

Here’s the part that keeps me up at night: there’s no slowing down and taking our time. This is a race to the singularity, and it’s winner-takes-all.

The alignment problem is a very hard problem. Nobody knows if we have enough time to solve it. The timelines are too uncertain. My friend at the lab is optimistic about solving alignment, but I’m not convinced. It could be that people at the labs have to say that for their own sanity - because what’s the alternative? Giving up?

Capabilities are advancing faster than alignment research. Every few months, the models get significantly more capable. The time between breakthroughs is getting shorter.

And we can’t just stop. Because if one lab stops, another won’t. If one country pauses, another will race ahead. The incentives all point in one direction: forward, faster, regardless of whether we’re ready.

Why Our Community Understands

I live in a tight-knit community in the foothills of the Snowy Mountains. Five years ago, we dealt with extended bushfires on three sides of our town. Many people lost homes, farms, livelihoods. Some lost their lives.

This community understands that threats can be very real and immediate. They know what it’s like when something you thought was distant suddenly surrounds you. When you have to make life-or-death decisions with incomplete information.

When I talk about AI risk, people here get it in a way that city dwellers sometimes don’t. The general sense is that the AI risk is more serious than bushfire, but not as imminent. So there’s real concern, but it’s measured. Not panic. But not dismissal either.

The pressing topic here is food preparedness. In a remote rural area, that makes sense. People understand supply chains, local resilience, what happens when systems break down.

But for people in the city, the urgency is different. The timeline feels more immediate because the economic disruption will hit urban knowledge workers first and hardest.

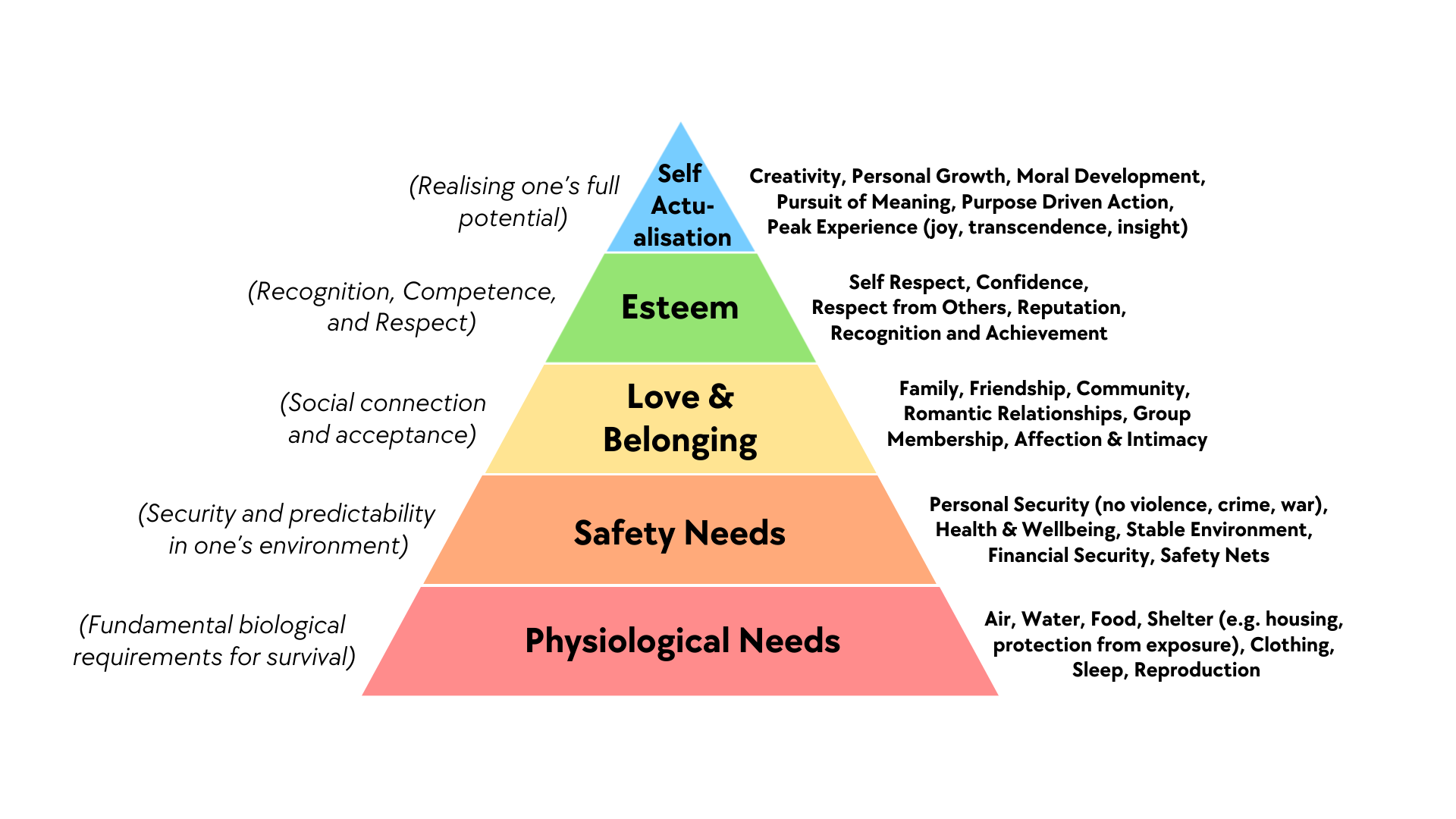

Preparing: Maslow’s Hierarchy as a Framework

When people ask me what they should do, I point them to Maslow’s hierarchy of needs. Start at the bottom.

Physiological needs: Food and water. This isn’t preppers hoarding cans in a bunker. This is understanding where your food comes from, building relationships with local growers, thinking about community food systems that can function if larger supply chains are disrupted.

Safety needs: Our community is likely to become a very desirable destination for those escaping the cities if things get difficult. But we can’t cope with a massive influx of people. We’ll need to protect our borders and our food systems. That sounds harsh, but it’s reality. Every community will face similar calculations.

Belonging and love: This is why I’m doing these meetups. Community bonds matter more than almost anything else. The people who thrive in disruption are the ones who have strong networks, who’ve built trust, who know their neighbors.

Esteem: In a world where traditional career paths are disrupted, where expertise is being automated, we’ll need to redefine what gives us purpose and value. This is harder than it sounds.

Self-actualization: For those who can think this far ahead - what do you want to build, create, contribute in a world that’s fundamentally different? What are you passionate about that’s harder to automate?

The people who start preparing now - not in a panic, but thoughtfully - will be in a better position regardless of which way things go.

Why I Built Beyond Better

People sometimes ask if my work on Beyond Better (BB) is connected to community preparedness. Not directly. BB is a tool to help knowledge workers be more productive, which will also mean some people could lose work. That’s not why I’m building it, though.

It’s in my nature to create. My previous personal blog was literally about creating things. BB is just the most natural thing for me to create in an AI-powered world. It’s what I know how to do.

But there’s an irony I’m fully aware of: I’m building tools that accelerate the very transformation I’m warning people about. I don’t have a clean answer for that. I think the transformation is happening regardless of what I do. I think people are better off with good tools than bad ones. But I also think about it a lot.

From Local to Online

After I started the weekly coffee meetups here in town, I told my family and friends about it. Nearly every one of them replied with “I want that.” They wanted the same conversations, the same space to ask questions and understand what’s happening.

So I started the monthly online meetups. Same purpose, broader reach. A space for honest conversations about what’s actually happening and how we prepare.

What I want people to take away - whether they come to the local weekly meetups or the monthly online ones - is greater awareness. That’s it. Just awareness of changes that are happening. Not theoretical future changes. Current reality.

This isn’t speculation. The only unknown is whether we get utopia or dystopia, but one of them will happen. We’re past the point where we can avoid the transformation entirely.

If people leave with greater awareness, then preparation will happen as a natural result of more conversations with others. Awareness spreads. Ideas spread. Actions spread.

The Comparison That Won’t Leave My Mind

Think back to February 2020. A few people were talking about a virus spreading overseas. But most of us weren’t paying attention. The stock market was fine. Kids were in school. We were going to restaurants, planning trips, living normally.

If someone had told you they were stockpiling toilet paper, you would have thought they’d spent too much time on a weird corner of the internet.

Then over the course of about three weeks, the entire world changed. Offices closed. Kids came home. Life rearranged itself into something you wouldn’t have believed if you’d described it to yourself a month earlier.

I think we’re in that “seems overblown” phase right now. Except this time it’s not about a virus. It’s about the fundamental restructuring of how human society works. How we work, how we organize ourselves, how we find meaning, how we survive.

The people who’ve been using the latest AI tools daily - really using them, not just trying the free version once in 2023 - aren’t debating whether this is real anymore. We’re watching it happen in real time. The debate is over. Now it’s about preparing.

What I’m Asking You To Do

I’m not asking you to panic. Panic doesn’t help anyone.

I’m not asking you to quit your job and move to a bunker. That’s not realistic or helpful.

What I’m asking is for you to take this seriously. To understand that the gap between what’s actually happening and what most people think is happening is enormous. And that gap is dangerous because it prevents preparation.

Start having conversations. With your family. Your friends. Your community. Not to scare them, but to ensure they’re not caught completely off guard.

Think about what you can do now - today, this week - that makes you more resilient regardless of which way things go. Build relationships. Learn skills. Understand your local systems. Get your financial house in order. Think about Maslow’s hierarchy and work your way up.

And if you want to join the conversation, that’s why I’m hosting these online meetups. Not to sell you anything. Not to pitch products. Just to create space for honest dialogue about what’s happening and how we navigate it.

The single biggest advantage you can have right now is being early. Early to understand. Early to adapt. Early to prepare.

This isn’t about being a pessimist or an optimist. It’s about being a realist. The future isn’t arriving someday. It’s here. The question is whether we’re ready for it.

Next Online Meetup: Third Wednesday of each month

Register at:

https://beyondbetter.app/discuss-our-future

This conversation matters. Be part of it.